Many web servers (e.g. Tomcat, Apache) dedicate a thread to every open connection. When a web request comes in, a thread is either created fresh or, if possible, taken from a thread pool. The thread performs some computation and I/O, and the thread scheduler puts it to sleep when it blocks for I/O or when it exceeds its quantum. This works well enough, but as more and more threads are created to handle simultaneous connections, the paradigm starts to break down: each new thread costs memory for its stack and adds context-switching work for the scheduler, and a large enough number of simultaneous connections will grind the server to a halt.

node.js is a JavaScript environment built from the ground up to use asynchronous I/O. Like other JavaScript implementations it has a single main thread. It achieves concurrency through an API that requires you to provide a callback (a closure) specifying what should happen when each I/O operation completes. Instead of using threads or blocking, node.js uses low-level event primitives (such as epoll or kqueue) to register for I/O notifications. The overhead of context switching and thread tracking is replaced by a simple queue of callbacks. As the events come in, node.js invokes the corresponding callback. The callback then carries on its work until it either begins another I/O request or completes its task, at which point node.js handles the next event or sleeps while waiting for a new one. Here's a simple web-based "Hello, world!" in node.js from the snap-benchmarks repository:

As you can see, all I/O operations accept a callback as their final argument. Instead of a series of calls that happen to block, we explicitly handle each case where an I/O request completes, giving us code consisting of nested callbacks.
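To make the nested-callback style concrete, here is a minimal illustrative sketch using node's built-in `fs` module (it is not the snap-benchmarks listing). The `roundTrip` helper and the temp-file name are hypothetical, made up for this example. It writes a file, reads it back, and deletes it, with each step starting only inside the callback of the step before:

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: write `text` to `file`, read it back, delete the file,
// then pass the contents (or the first error) to `done`.
function roundTrip(file, text, done) {
  fs.writeFile(file, text, (writeErr) => {
    if (writeErr) return done(writeErr);
    // Runs only after the write has completed.
    fs.readFile(file, 'utf8', (readErr, contents) => {
      if (readErr) return done(readErr);
      // Runs only after the read has completed.
      fs.unlink(file, (unlinkErr) => {
        if (unlinkErr) return done(unlinkErr);
        done(null, contents);
      });
    });
  });
}

const file = path.join(os.tmpdir(), `hello-${process.pid}.txt`);
roundTrip(file, 'Hello, world!', (err, contents) => {
  if (err) throw err;
  console.log(contents);
});
console.log('I/O requested; the main thread is free to do other work');
```

The last `console.log` runs first: the calls into `fs` only request the I/O and return immediately, and the callbacks fire later from the event loop. Three sequential steps already cost three levels of nesting.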

In addition to reducing the strain placed on a system by a large number of threads, this approach also removes most of the need for thread synchronization: callbacks run one at a time in rapid sequence, rather than in parallel, so the data races and lock-induced deadlocks of multithreaded code are not a concern (though callbacks that share state can still interleave in surprising orders). The disadvantages are that any runaway CPU computation will hose your server (there's only one thread, after all) and that you must write your code in an extremely unnatural style to get it to work at all. The slogan of this blog used to be a famous Olin Shivers quote: "I object to doing things computers can do", and explicitly handling I/O responses certainly falls into the category of Things a Computer Should Do.
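The runaway-computation problem is easy to reproduce. The sketch below assumes nothing beyond node's built-in timers: it asks for a timer callback after 10 ms and then spins the CPU for 200 ms. `busyWait` and `measureTimerDelay` are hypothetical names invented for this example:

```javascript
// Hypothetical helper: spin the CPU for roughly `ms` milliseconds.
function busyWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Burn CPU; no callback can run while this loop is active.
  }
}

// Schedule a timer for `timerMs`, then hog the single thread for `busyMs`.
// `done` receives the time that actually passed before the timer fired.
function measureTimerDelay(timerMs, busyMs, done) {
  const start = Date.now();
  setTimeout(() => done(Date.now() - start), timerMs);
  busyWait(busyMs);
}

measureTimerDelay(10, 200, (elapsed) => {
  console.log(`timer requested for 10 ms fired after ${elapsed} ms`);
});
```

Because the busy loop never returns to the event loop, the timer cannot fire until the loop finishes, so the reported delay is at least 200 ms rather than 10. On a real server, every pending request would be stuck behind that loop in the same way.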

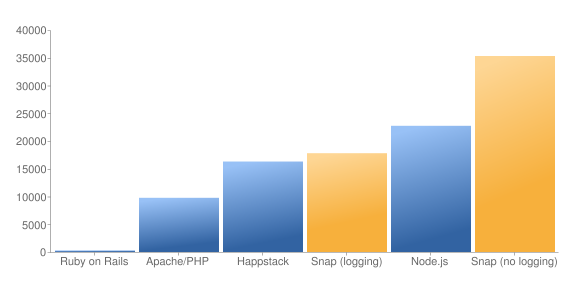

Anyway, this got me thinking about how Haskell could take advantage of the asynchronous I/O paradigm. I was delighted to find that others were thinking the same thing. As it happens, the new I/O manager in GHC 7 uses async I/O behind the scenes - so your existing code with blocking calls automatically takes advantage of the better scalability of asynchronous I/O. The Snap framework has published benchmarks that show it handling about 150% more requests per second than node.js:

But what's even nicer is that there's no need to contort your code to get this performance. Snap and the underlying GHC I/O manager completely abstract away the asynchronous nature of what I'm doing. I don't need to set callbacks because the I/O manager is handling that under the covers. I pretend I'm using blocking I/O and the I/O manager takes care of everything else. I get some defense against long-running computations (because more than one thread is processing the incoming events) and, most importantly, I can write code in what I consider a saner style.

The catch is that most I/O libraries are not designed for asynchronous I/O. Network and other I/O calls made at the Haskell level go through the I/O manager and work as described, but external libraries (say, a MySQL client) are often written with blocking I/O at the C level. Each such call ties up an OS thread until it returns, which can negate some of the scalability gains from asynchronous I/O.